Four decades ago, philosopher Hilary Putnam described a famous and frightening thought experiment: a “brain in a vat,” removed from its human skull by a mad scientist who then stimulates its nerve endings to create the illusion that nothing has changed. The disembodied consciousness lives on in a state that seems straight out of The Matrix, seeing and feeling a world that does not exist.

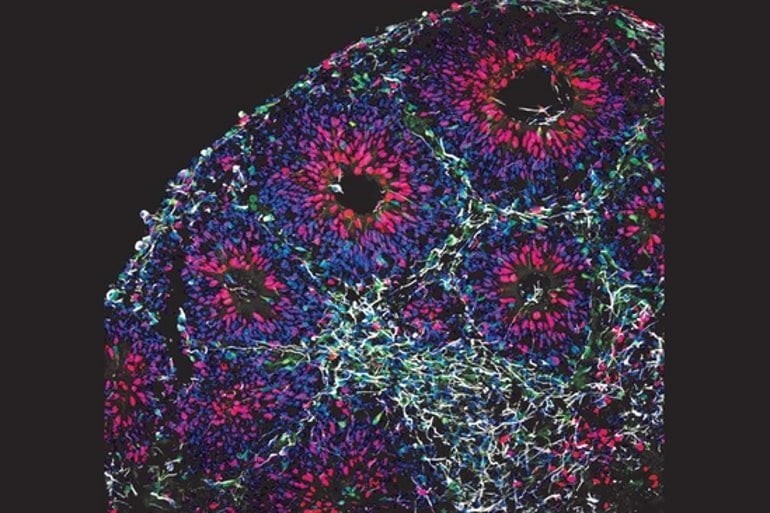

While the idea was pure science fiction in 1981, it’s not so far-fetched today. Over the past decade, neuroscientists have begun using stem cell cultures to grow artificial brains, so-called brain organoids — convenient alternatives that circumvent the practical and ethical challenges of studying the real thing.

The rise of artificial brains

As these models improve (currently they are pea-sized simplifications), they may lead to breakthroughs in the diagnosis and treatment of neurological diseases. Organoids have already improved our understanding of conditions such as autism, schizophrenia and even Zika virus, and have the potential to illuminate many others. But they also raise troubling questions.

At the heart of organoid research is a catch-22: proxies must resemble actual brains to yield insights that could improve human life; but the greater the resemblance—that is, the nearer they come to consciousness—the more difficult it is to justify using them for our selfish purposes.

“If it looks like a human brain and acts like a human brain,” writes Stanford law professor Henry Greely in an article published in The American Journal of Bioethics in 2020, “at what point should we treat it as a human brain, or a human being?”

The artificial brain and the problem of consciousness

In the meantime, scientists, bioethicists and philosophers are grappling with a surreal set of conundrums: Having created these strange entities, what moral consideration do we owe them? How do we balance the potential harm to organoids against their enormous benefit to humans? How can we even know if we are harming them?

A big part of the problem is that we can’t answer this last question: there’s no clear way to tell if an organoid is suffering. Humans and animals use their bodies to communicate distress, but a patch of neurons has no way to communicate with the outside world.

Greely, who specializes in biomedical ethics, puts it darkly in his 2020 article: “In the vat, no one can hear you scream.”

Although researchers have identified neural correlates of consciousness (brain activity that marks conscious experience), there is no guarantee that these correlates will be the same in organoids as they are in humans. Nita Farahany, a law professor at Duke, and 16 colleagues explained the difficulty in a 2018 Nature paper.

“Without knowing more about what consciousness is and what building blocks it requires,” they write, “it may be difficult to know what signals to look for in an experimental brain model.”

Based on some experiments, it seems that artificial brains are already on the verge of awareness. In 2017, a team of scientists from Harvard and MIT recorded neural activity while shining light on an organoid’s photosensitive cells, indicating that it can respond to sensory stimuli. But such experiments do not, and indeed cannot, prove that the organoid has any internal experience corresponding to the behavior we observe. (Another creepy thought experiment, the “philosophical zombie,” highlights the fact that even our belief in other people’s minds is ultimately a matter of faith.)

Looking through the eyes of an organoid

Last year, a group of Japanese and Canadian philosophers, writing in the journal Neuroethics, sidestepped this difficult question altogether. According to their “precautionary principle,” we should err on the side of caution and simply assume that organoids are conscious. This takes us beyond the intractable question of whether they have consciousness, at which point we can ask what kind of consciousness they might have. That is a difficult question in itself, but perhaps a more fruitful one than the first.

The researchers argue that how we should treat organoids depends on what they can experience. In particular, it depends on “valence,” the feeling that something is pleasant or painful. Consciousness alone does not confer moral status; it is possible that some of its forms do not involve suffering. No harm, no foul.

But the more similar an organism is to us, the philosophers suggest, the more likely its experience is to resemble ours. So where do organoids fit on the spectrum? It’s hard to say. They share important aspects of our neural structure and development, although so far even the most advanced are mainly small parts of certain brain regions, devoid of the vast network of interconnections from which human sensation arises. Most organoids contain only 3 million cells to our 100 billion, and without blood vessels to provide oxygen and nutrients, they cannot mature much further.

That said, scientists can now join independently grown organoids, each representing a different brain region, to allow electrical communication between them. As these “assembloids” and their isolated components become more complex, it is possible that they will have some kind of “primitive” experience. For example, a photosensitive organoid such as the one mentioned above might vaguely sense a flash of light, then a return to darkness, even if the event does not trigger thoughts or feelings.

It only gets weirder, and more relevant to the ethical dilemma. If an artificial model of the visual cortex can generate visual experience, it stands to reason that a model of the limbic system (which plays a key role in emotional experience) could feel primitive emotions. Climbing the ladder of consciousness, perhaps a model of the brain networks underlying self-reflection could become aware of itself as a separate being.

An ethical framework for artificial brains

The philosophers are quick to point out that this is all speculation. Even if organoids eventually develop into full-fledged brains, we know so little about the origins of consciousness that we cannot be sure neural tissue would function the same way in such an alien context. And while the simplest solution would be to halt this research until we better understand the workings of the mind, a moratorium may come at a price.

In fact, some observers argue that it would be unethical not to continue working with organoids. Farahany, in a podcast accompanying the Nature paper, says “it is our best hope to alleviate the vast amount of human suffering caused by neurological and psychiatric disorders.” By establishing appropriate guidelines, she argues, we can address organoid welfare without sacrificing medical benefits.

In 2021, Oxford philosopher Julian Savulescu and Monash University philosopher Julian Koplin proposed such a framework, based to some extent on existing animal research protocols. Among other things, they suggest creating no more organoids than necessary, making them only as complex as necessary to achieve research goals, and using them only when the expected benefits justify the potential harm.

The past few years have brought several more significant efforts to unravel the ethical implications of organoid research. In 2021, the National Academies of Sciences, Engineering, and Medicine published a book-length report on the subject, and the National Institutes of Health is funding a similar ongoing project through its BRAIN Initiative.

The uncertainty surrounding brain organoids touches on some of the deepest questions we can ask: What does it mean to be conscious, to be alive, to be human? These are old, eternal concerns. But in light of this “looming ethical dilemma,” as it has been called, they seem particularly urgent.

Even if the prospect of organoid consciousness is remote, Farahany says, “the very fact that it’s remote, not impossible, creates a need to have the conversation now.”